After a cursory look at the Fund for Peace’s (hereafter FFP) 2014 fragile state rankings, @ACLEDInfo mentioned on Twitter that the metrics were ‘questionable’. We suggested that the index’s rankings of fragility bear little relationship to reality. A polite reply from the FFP opened the space for more discussion. Since a complete conversation is not ‘tweetable’, this piece summarizes why we consider any failed/fragile state index to be a largely futile, and empirically questionable, exercise.

In the FFP’s tenth index on state failure and fragility, Chad is more ‘fragile’ than Pakistan, Zimbabwe than Nigeria, Malawi than Libya and Kenya than Mali. The Malawi-Libya comparison is perhaps the most egregious, and indicates the distance between an intuitive understanding of what state fragility is and how it is expressed in indices such as the FFP’s. In 2013 and into 2014, Malawi underwent a corruption scandal; a hotly contested, yet largely nonviolent, election; and a transition of power between parties as a result of the 2014 election. Like other sub-Saharan states, it has pockets of deepening poverty, high population and climate vulnerability. Yet overall, it is one of the least violent states across Africa and indeed the developing world. Libya, on the other hand, is still undergoing a violent and unstable transition from autocracy to democracy.

Benghazi was one of the most violent hotspots in Africa in 2013; over a dozen discrete violent groups are operating within the state with the aim of destabilizing the post-Qaddafi government apparatus; and the government is holding together a weak, fragmented and persistently attacked alliance. Battles and violence against civilians characterize conflict within the state, in contrast to the high rate of intermittent riots and largely non-violent protests that occur in Morocco and Tunisia. Libya has a development level that is higher than African averages, but the variation in public good access and political stability throughout the state is also high. To place Malawi as more ‘fragile’ than Libya (and to make the other comparisons mentioned above) is to fundamentally misunderstand how a state apparatus is made vulnerable to and by internal dissent, poverty and political institutions.

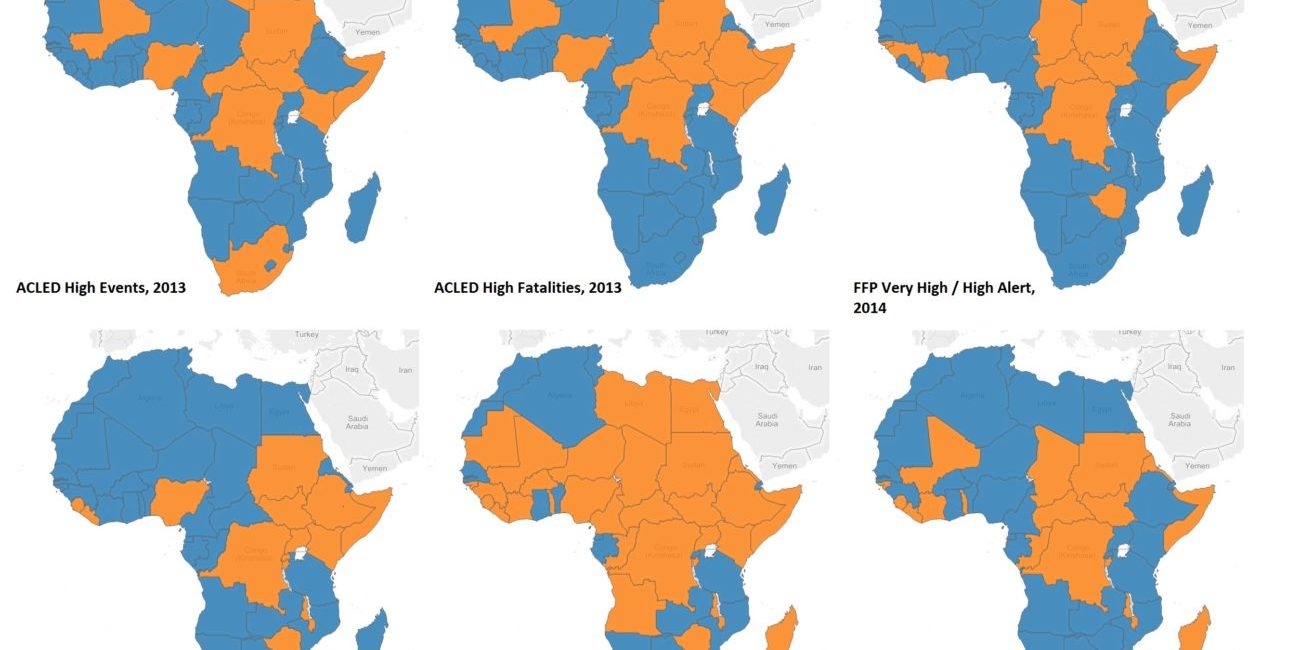

FFP’s index is the subject of this piece, but multiple fragility indices commit many of the same errors, and they can also differ in which countries are considered fragile. The accompanying figure displays how African countries have fared in recent indices, compared to overall event and fatality counts from ACLED data (excluding non-violent events and peaceful protests). There is limited agreement on what constitutes ‘fragility’, largely because the definitions, and the necessary and sufficient conditions attached to them, are vague, contradictory and tautological.

FFP rankings are based on a series of twelve indicators, each a compilation of over 100 initial sub-indicators, whittled down to fourteen sub-indicators using a factor analysis. Data for the indicators and sub-indicators come from three main data sources and are aggregated through the FFP’s CAST (Conflict Assessment System Tool). Each of the selected twelve indicators (demographic pressure, refugees and IDPs, group grievances, brain drain/human flight, uneven economic development, economic pressures, state legitimacy, public services, human rights and the rule of law, security apparatus, factionalized elites and external intervention) is therefore based on several sub-indicators that may, or may not (it is not mentioned), be measured and aggregated equally to create a score by which countries are relatively ranked.

Our issues with the index are three-fold: the composite indicators and their inherent endogeneity; how and why different indicators are weighted the way they are; and the meaning of fragility and failure and its consequences. While there is a small academic cottage industry devoted to dismantling the concept of state failure and fragility as a useful heuristic (some excellent pieces include that by Putzel and Di John, 2012), there is somewhat less material questioning how measures should be compiled, if at all, and what consequences ‘bad’ rankings have for states.

The measures

Policy communities often appreciate a comparable assessment, or relative measure, in order to prioritize and classify crises. However, measurements can obscure as much as they elucidate about the relative state of crises. This is particularly true when the criteria used to measure distinct indicators are similar, identical or endogenous. By integrating factors that are similar, aggregated indices can ‘double count’ and miscount, leading to poor and corrupted relative measures.

The composite indicators, as described by the methodology section available at http://ffp.statesindex.org/methodology, include sub-measures that consistently overlap. These include the constituent sub-indicators for economic development and poverty/economic decline, or for state legitimacy and public services, as well as sub-indicators with multiple measures of ‘elections’ and ‘electoral institutions’, ‘power struggles’ and ‘political competition’, ‘housing access’, and ‘civil rights’ and ‘systematic violation of rights’. Other sub-indicators are identical and used in several indicators: violence and harassment is captured in multiple ways by the ‘group grievance’ indicator, but also by ‘state legitimacy’, ‘human rights and the rule of law’ and ‘security apparatus’. ‘Infant mortality’ is used to measure both ‘demographic pressures’ and ‘public services’.
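The double-counting problem can be made concrete with a small sketch. The indicator-to-sub-indicator mapping below is hypothetical and heavily simplified (only the shared use of ‘infant mortality’ is taken from the text above; the other sub-indicator names are placeholders), and it assumes that indicators are weighted equally and that each indicator averages its sub-indicators, which the FFP methodology does not confirm.

```python
from collections import Counter

# Hypothetical, simplified mapping of indicators to sub-indicators.
# Only the reuse of "infant_mortality" reflects the article; the rest
# are placeholder names, not the FFP's actual sub-indicator lists.
INDICATORS = {
    "demographic_pressures": ["infant_mortality", "population_growth"],
    "public_services": ["infant_mortality", "school_enrolment"],
    "group_grievance": ["violence_and_harassment", "discrimination"],
}


def effective_weights(indicators):
    """Return each sub-indicator's share of an equally weighted total score.

    Assumes every indicator counts equally and averages its sub-indicators.
    """
    shares = Counter()
    for subs in indicators.values():
        for sub in subs:
            # An indicator's unit weight is split evenly among its sub-indicators.
            shares[sub] += 1.0 / len(subs)
    total = sum(shares.values())
    return {sub: share / total for sub, share in shares.items()}


weights = effective_weights(INDICATORS)
for name, weight in sorted(weights.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {weight:.3f}")
```

Under these assumptions, a sub-indicator that appears in two indicators carries twice the effective weight of one that appears once, even though no one chose to weight it more heavily.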

Other indicators and sub-indicators are endogenous: endogeneity relates to how one factor is highly correlated with another, without being the same. An example of this is how violence consistently creates high levels of IDPs. There is also some debate about whether IDPs cause violence (which, if so, suggests a ‘feedback’ mechanism). Including endogenous variables creates the same problems as integrating similar variables: it is double counting, and it should be captured in a factor analysis (but only if that is done within and across indicators). Clearly endogenous variables in the FFP index include ‘elite power struggles’ and multiple manifestations of violence; ‘state legitimacy’ measures and ‘factionalized elites’; and ‘demographic pressures’ and ‘human flight’. Aggregating sub-indicator assessments within indicators, and indicators within scores, without accounting for these relationships violates basic statistical principles.
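One rough way to screen for this kind of overlap is to check pairwise correlations between sub-indicator scores before aggregating them. The sketch below uses invented scores for five unnamed countries purely for illustration (they are not FFP or ACLED data), and the 0.8 threshold is an arbitrary assumption. A high correlation flags a pair for closer inspection; it does not by itself establish which factor drives the other.

```python
from itertools import combinations
from math import sqrt

# Invented 0-10 scores for five unnamed countries (illustration only;
# not FFP or ACLED data).
SCORES = {
    "violence": [2.0, 4.0, 6.0, 8.0, 9.0],
    "idps": [1.0, 3.0, 6.0, 7.0, 9.0],
    "airports": [5.0, 2.0, 7.0, 3.0, 6.0],
}


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def correlated_pairs(scores, threshold=0.8):
    """Return (name_a, name_b, r) for pairs with |r| at or above the threshold."""
    flagged = []
    for a, b in combinations(scores, 2):
        r = pearson(scores[a], scores[b])
        if abs(r) >= threshold:
            flagged.append((a, b, r))
    return flagged


flagged = correlated_pairs(SCORES)
for a, b, r in flagged:
    print(f"{a} and {b} move together (r = {r:.2f}); summing both risks double counting")
```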

There are further empirical issues: all sub-indicators are compiled on a range from 0 to 10, and the distinctions between levels are relatively vague (for example, moving from 1 to 2 is based on meager to insignificant, while moving from 9 to 10 is devastating to catastrophic). These levels are sometimes associated with the numbers of people affected (100, 1000s, etc.), but few data sources can accurately assess the impact of any of the factors used in the FFP index on an annual and immediate basis.

The constituent parameters

In assessing state fragility – taken here to mean the ability of a state to continue to function as a cohesive unit, where even challenged regimes can maintain authority and a basic level of state capacity – surely some attributes are more important than others. For example, the ‘state legitimacy’ and ‘security apparatus’ indicators include the presence of ‘armed insurgents’, ‘suicide bombers’, ‘political assassinations’, ‘paramilitaries’, ‘political violence’ and ‘militias’.

These factors are likely to affect regime stability, state capacity and institutional coherence far more than other sub-indicators, including ‘access to information’, ‘access to housing’, ‘the presence of airports’, ‘consumer confidence’, ‘job training’ and ‘orphan population’. While these sub-indicators are important measures of the problems facing poor countries, they do not have the same effects on state fragility. To give such sub-indicators equal weight is both theoretically and empirically questionable, and leads to a corrupted index.

There are several open questions about the relevance of each indicator to ‘fragility’ and/or ‘state failure’. Brain drain will not create state fragility, and the role of demographic factors is hotly debated; yet both are weighted equally with known, consistent factors including state legitimacy, factionalized elites and group grievances.
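How much the weighting decision matters can be shown with a toy comparison. The two countries, their scores and the alternative weights below are all hypothetical, chosen only to illustrate the mechanism; they are not FFP values, and the ‘violence-heavy’ weights are not a recommended scheme.

```python
# Hypothetical 0-10 indicator scores for two invented countries.
# Country A: low political violence, high demographic and migration pressure.
# Country B: high political violence, lower demographic and migration pressure.
SCORES = {
    "Country A": {"security_apparatus": 3.0, "state_legitimacy": 4.0,
                  "human_flight": 9.0, "demographic_pressures": 9.0},
    "Country B": {"security_apparatus": 9.0, "state_legitimacy": 8.0,
                  "human_flight": 4.0, "demographic_pressures": 3.0},
}

# Two weighting schemes (both assumptions made for illustration).
EQUAL = {"security_apparatus": 1.0, "state_legitimacy": 1.0,
         "human_flight": 1.0, "demographic_pressures": 1.0}
VIOLENCE_HEAVY = {"security_apparatus": 3.0, "state_legitimacy": 3.0,
                  "human_flight": 1.0, "demographic_pressures": 1.0}


def weighted_score(scores, weights):
    """Weighted average of one country's indicator scores."""
    total_weight = sum(weights[name] for name in scores)
    return sum(weights[name] * value for name, value in scores.items()) / total_weight


def rank(all_scores, weights):
    """Country names ordered from most to least 'fragile' under the given weights."""
    return sorted(all_scores,
                  key=lambda country: weighted_score(all_scores[country], weights),
                  reverse=True)


print("Equal weights:         ", rank(SCORES, EQUAL))
print("Violence-heavy weights:", rank(SCORES, VIOLENCE_HEAVY))
```

With equal weights the low-violence country ranks as more fragile; once the security and legitimacy indicators count for more, the order reverses. The ranking is therefore as much a product of the weighting choice as of the underlying scores.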

The result of these problematic measurement decisions is that the index returns quite questionable rankings, wherein Malawi, Zimbabwe and Chad – all countries with significant, but different, problems resulting in relatively low political violence – are regarded as more fragile than Pakistan, Nigeria and Libya – countries with substantial problems of political violence, institutional instability and an inability of the state to provide security for significant parts of the population, all of which have been getting progressively worse in recent years.

Is it useful?

Finally, what use is it to rank states? In response to the chronic problems found amongst the most under-developed and conflict-ridden country cases, state fragility research and policy have almost exclusively privileged a state-building and democratization approach. But typical perspectives on state fragility and failure overlook a key point: the ‘anatomy’ of fragility and failure differs substantially across the diverse selection of likely states.

The disparity in causes and categorization makes this term relatively meaningless for research or policy. The international response to state fragility is to build the capacity and reach of governing and military institutions; this in turn creates a higher likelihood of state-initiated violence and civilian risk. Indeed, modern African conflict demonstrates how political institutions – mandated and provided for by development aid – often incentivise political elite violence, which now dominates conflict profiles across the continent. Given the centrality of peace and conflict to the post-2015 humanitarian and development agendas, policy makers and researchers must acknowledge both how conflict has changed in developing states, and how institutions incentivise conflict to occur.
